Universidad Politécnica de Madrid

Escuela Técnica Superior de Ingenieros

Industriales

Design and Optimization of Power

Delivery and Distribution Systems Using

Evolutionary Computation Techniques

Doctoral Thesis

Leonardo Laguna Ruiz

Master's Degree in Industrial Electronics, Universidad Politécnica de Madrid

2012

Departamento de Automática, Ingeniería

Electrónica e Informática Industrial


Author

Leonardo Laguna Ruiz

Master's Degree in Industrial Electronics, Universidad Politécnica de Madrid

Advisor

Roberto Prieto López

Doctor of Industrial Engineering, Universidad Politécnica de Madrid


Today's computing platforms contain a very large number of components, each of which must be supplied at its own voltage level and power rating. Even a very small platform, such as a handheld computer, may contain more than twenty different loads and voltage regulators. Power delivery designers are required to provide, in a very short time, a power architecture that optimizes performance while meeting the electrical specifications and the cost and size targets.

The appropriate selection of the architecture and converters directly defines the performance of a given solution. Therefore, the designer needs to be able to evaluate a significant number of options in order to know with good certainty whether the selected solutions meet the size, energy efficiency and cost targets.

The difficulty of selecting the right solution arises from the wide range of power conversion products offered by different manufacturers. These products range from discrete components (used to build converters) to complete power conversion modules that employ different manufacturing technologies. Consequently, in most cases it is not possible to analyze all the alternatives (combinations of power architectures and converters) that can be built, and the designer has to select a limited number of converters in order to simplify the analysis.

To overcome these difficulties, this thesis proposes a new design methodology for power supply systems. The methodology integrates evolutionary computation techniques, which make it possible to analyze a large number of alternatives. This extensive analysis helps the designer to quickly define a set of feasible solutions and select the best performance trade-off for each application.

The proposed approach consists of two key steps: one for the automatic generation of architectures and another for the optimized selection of components. This thesis details the implementation of both steps. The usefulness of the methodology is corroborated using real problems and experiments designed to test the limits of the algorithms.

Today's computing platforms consist of a large number of components that require different voltage and power levels. Even a small platform, such as a mobile phone, can contain more than twenty different loads and voltage regulators. The designers of the power distribution systems for these platforms must be able to provide, in a very short time, the power architecture that optimizes performance and meets the electrical specifications as well as the cost and size requirements.

The appropriate selection of the architecture and the converters directly defines the performance of a given solution. Therefore, the designer needs to be able to evaluate a large number of options in order to know with greater certainty whether the selected solution meets the size, efficiency and cost targets.

The difficulty of selecting the right solution increases because a large number of power conversion products are available from different manufacturers. These products range from discrete components (used to build converters) to complete power conversion modules that use different manufacturing technologies. Consequently, in most cases it is not possible to analyze all the alternatives (combinations of architectures and converters) that can be built. The designer is forced to select a limited number of components in order to simplify the analysis.

To resolve these difficulties, this thesis proposes a new methodology for the design of power systems. The methodology integrates evolutionary computation techniques, which make it possible to analyze a large number of alternatives. This extensive analysis helps the designer to define the set of best solutions very quickly and to select among them those that offer the best performance trade-off for the application.

The proposed methodology consists of two stages: the automatic generation of architectures and the optimized selection of components. This thesis details the implementation of both steps. The usefulness of the methodology is corroborated using real problems and experiments designed to put the algorithms to the test.

The future cannot be predicted, but futures can be invented.

Dennis Gabor

Computers are becoming more powerful every day.

However, reality has turned out different from what science fiction told us in past decades. Back then, the key seemed clear: build a single supercomputer with unimaginable computational power, able to perform every calculation we require and to reason beyond human capacity. In reality, there are technological challenges that we must face before dreaming of that. One of them is supplying energy to these computers.

Science fiction books have told us what computers and energy sources will be like in the future. However, they give us no clue about what energy conversion technology will be like in those days. Maybe in that future researchers will have found a way of transforming energy without consuming it. Meanwhile, it is time for us to fix the mistakes that we have made in the past.

Some years ago, energy efficiency was not a priority. Engineers were busy trying to make things work, and they assumed that the energy would be there when the time came. In most block diagrams describing the behavior of a system, the power system was not even shown. Some people realized that making power systems more efficient could bring environmental benefits, but at that time the environment was not a priority. Therefore, they had to prove that saving energy could also bring economic benefits.

New problems have emerged. The single-unit supercomputer has split into millions of smaller interconnected entities. We have PCs (personal computers) capable of performing very complex tasks in the comfort of our homes. Each of these computers consumes a small amount of energy, but because there are millions of them, together they have a significant impact.

These PCs have in turn split into a wide variety of smaller devices: netbooks, cellphones, smartphones, MP3 players, etc. These devices do not stay in our homes; we carry them with us every day. Every time the computing units change, the paradigms also change and we have new problems to face.

We want computers to be cheaper, smaller, faster, lighter and cuter; simply better. To achieve that, we need to learn how to make better use of what we have. It could be good to slow down the development of fancy new products and focus our efforts on doing things better: designing optimized systems whose first priority is improving our quality of life. Our next concern should be extending the lifetime of computing devices; this will bring both ecological and psychological benefits.

In contrast, commercial applications require products to be developed in a very short time. In many cases an excellent feature that would improve the lifetime or energy efficiency of a device has to be sacrificed because it costs more money and sales would be affected. Companies have to fight hard to stay competitive and survive.

Despite the technological wars, the mass production of consumer products such as MP3 players, smartphones and video games has brought good things to our lives. For example, the video game industry has driven manufacturers of visualization technology to develop low-cost solutions. These low-cost visualization technologies have been used in many medical applications that are saving human lives.

There is no evil in technology, only ignorance. Even though many science fiction authors have imagined how society will be shaped by technology in the far future, we do not know how each small step that we achieve will affect us, nor how many stages we have to pass through before becoming a society full of prosperity.

Simplicity is a great virtue but it requires hard work to achieve it and education to appreciate it.

And to make matters worse: complexity sells better.

Edsger W. Dijkstra

Nowadays computer-aided design is a necessity in most areas of engineering. Every day we tend to make more complex designs, and we require simpler tools that help us achieve our tasks. In some areas of electronics, such as digital system design, it is practically impossible to design a system of ordinary complexity without a computer.

To handle this complexity, researchers have been creating new perspectives that help us see problems in a friendlier way. In electronics, the SPICE simulator was created in the seventies. This simulator has played an extremely important role in the design of electronic systems. The idea behind SPICE is to simulate the final implementation of a circuit. For that purpose, it provides “virtual” components that we can interconnect and let interact. The result is a very accurate simulation of the circuit behavior.

SPICE is a tool for simulating the final implementation and is not meant to replace the calculations needed when designing a circuit; it is better regarded as a verification tool than as a design tool. In the design of power converters SPICE is widely used because it can reproduce the behavior of the switching semiconductors. However, these simulations may take a long time, which makes it very difficult to design a power converter using SPICE alone.

Among design tools for electronics, we can find a large variety of tools that solve specific problems. A good example of solving a complex problem by following a methodology is the design of analog filters. Tools for this purpose include FILTER-DESIGNER from TI [Texas Instruments, 2011], FilterLab from Microchip [Microchip, 2011] and FilterCAD from Linear Technology [Linear Technology, 2011], to mention a few. Using these tools, a simple way to design an analog filter requires (at least) three steps:

Following these steps will maximize the chances of succeeding.

These three steps can be considered a top-down methodology. At the beginning of the design we have only the specifications. Using these values as input, the filter design tool suggests an appropriate filter structure and the components that we should use. If we are satisfied with the solution, we can make the problem more concrete by implementing it in an electrical simulator, which uses more detailed models. This helps us to determine whether there are other problems, such as saturation and distortion. Once we are satisfied, we can refine the model further and implement the real circuit.

Using top-down methodologies has many advantages when designing large systems. In computer science, software complexity has been a problem since the seventies. Software was becoming so complex that it was hard to develop and maintain. Experts realized that they needed better tools (programming languages, editors, etc.) and better methodologies (based on paradigms such as structured and object-oriented programming) [Schach, 2007].

In power electronics, the complexity of the designs is also increasing. Power supply systems are evolving from a few converters to dozens of interconnected converters. This increase in complexity follows the evolution of the systems that demand energy. Power systems are becoming more distributed, consisting of many converters supplying energy to a large number of loads. This makes it more difficult for the designer to arrive at the right power system design.

The manufacturing and construction industries originated what is called the “waterfall model”. This model specifies a series of steps that we need to follow in order to deploy a product. The typical steps are requirements, design, implementation, verification and maintenance (see Figure 2.1). The name waterfall comes from the fact that, after finishing one step, we cannot go back; waterfalls flow in only one direction. In many industries, the cost of returning to a previous step, for example from implementation to design, may be prohibitive.

In other industries, such as software, changes are less expensive. For that reason, modified approaches have emerged: at each step we acquire knowledge, and we can return one step and feed that knowledge back in order to improve our design. This approach is shown in Figure 2.2.

In the electronics industry, making changes once the board is implemented is very costly. For that reason we need to be sure that the design we have selected satisfies all the requirements and is optimal. For that purpose we use electric circuit simulators such as SPICE.

It is very important to use the appropriate tools. When we are designing a power converter the main concerns are:

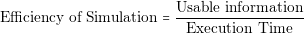

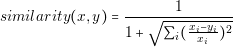

In order to guarantee these two points, the designer should perform a detailed analysis of the converter. The designer needs to know in detail the behavior of each current and voltage in the components. The most effective way of obtaining that information is through simulation. However, contrary to common belief, simulations are not free: building a simulation model and running it consumes time and money. We can introduce the concept of the Efficiency of Simulation as follows: the more usable information we obtain for the time we spend, the more efficient a simulation is.

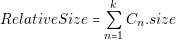

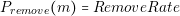

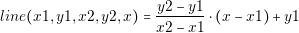

$$\text{Efficiency of Simulation} = \frac{\text{Usable information}}{\text{Time spent}} \tag{2.1}$$

To exemplify, 3D finite element analysis (FEA) simulations of magnetic components may take many hours to complete. This makes the analysis of many options inefficient, since we have to spend a long time simulating each possible solution. To use FEA efficiently, we need to design the component by other methods and use the FEA simulator only as a validation tool.

The execution time of a model determines whether a simulator can feasibly be used as a design tool or only as a validation tool. Power supply systems comprise multiple converters and loads, which makes it unfeasible to design a complete power supply system with SPICE-like tools.

In the following section the main problem treated in this thesis is presented.

In engineering, designing optimal systems with more than one objective is a complex task to achieve without computers. Consider as an example the optimization of a power converter in which we want to select the appropriate inductor, switching frequency and semiconductors in order to maximize its efficiency and reduce its size. The first thing we need to do is define equations for efficiency and size in terms of the parameters to optimize. Once we have the equations, we calculate these values for different options of semiconductors, switching frequency and inductor values. When we have the results, we need to determine which of those solutions present minimum losses and minimum size.

Deriving the equations for efficiency and size is a complex task that can be the topic of a complete PhD thesis. In addition, in order to have a degree of certainty that our solution will really be optimal, we need to test a wide range of components.
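The enumerate-and-compare procedure described above can be sketched in a few lines. The following is a minimal illustration, not the method developed in this thesis: the component values, the loss and size equations, and the ripple constraint are all hypothetical placeholders chosen only to show how candidate designs are evaluated and how the non-dominated (Pareto-optimal) trade-offs between losses and size are extracted.

```python
from dataclasses import dataclass
from itertools import product

I_OUT_A = 5.0  # assumed load current

# Hypothetical parts: name -> (on-resistance [ohm], switching energy [J], area [mm^2])
MOSFETS = {"Q_small": (0.030, 0.4e-6, 30.0), "Q_large": (0.010, 1.2e-6, 60.0)}
INDUCTANCES_UH = [1.0, 2.2, 4.7]
FREQUENCIES_KHZ = [200.0, 500.0, 1000.0]


@dataclass(frozen=True)
class Design:
    inductance_uH: float
    frequency_kHz: float
    mosfet: str
    loss_W: float
    size_mm2: float


def evaluate(l_uH, f_kHz, mosfet):
    """Toy loss and size equations; a real design needs validated models."""
    r_on, e_sw, q_area = MOSFETS[mosfet]
    conduction = I_OUT_A ** 2 * r_on   # I^2 * R conduction loss
    switching = e_sw * f_kHz * 1e3     # energy per cycle times frequency
    winding = 0.05 * l_uH              # assumed: larger L, more copper loss
    size = 40.0 * l_uH + q_area        # assumed: inductor area grows with L
    return Design(l_uH, f_kHz, mosfet, conduction + switching + winding, size)


def feasible(l_uH, f_kHz):
    """Assumed ripple limit: ripple ~ 1/(L*f), so L*f must exceed a floor."""
    return l_uH * f_kHz >= 1000.0


def dominates(a, b):
    """True if a is no worse than b in both objectives and better in one."""
    return (a.loss_W <= b.loss_W and a.size_mm2 <= b.size_mm2
            and (a.loss_W < b.loss_W or a.size_mm2 < b.size_mm2))


def pareto_front(designs):
    """Keep only the designs that no other design dominates."""
    return [d for d in designs if not any(dominates(o, d) for o in designs)]


designs = [evaluate(l, f, q)
           for l, f, q in product(INDUCTANCES_UH, FREQUENCIES_KHZ, MOSFETS)
           if feasible(l, f)]
front = sorted(pareto_front(designs), key=lambda d: d.loss_W)

if __name__ == "__main__":
    for d in front:
        print(f"{d.mosfet:8s} L={d.inductance_uH} uH f={d.frequency_kHz} kHz "
              f"loss={d.loss_W:.2f} W size={d.size_mm2:.0f} mm^2")
```

Even this toy search space grows as the product of the option lists, which is why exhaustive enumeration quickly becomes impractical when real component catalogs are used.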

The same complexity carries over to the design of power systems. If we want to design a system comprising more than one converter, the difficulty of the problem may grow to the point at which it is completely impractical to use the same models, methods and simulators used in the design of converters. Consider the design of a power system for a server computer. This type of platform requires supplying energy to one or more multi-core processors, memory banks, hard drives and many other peripherals. We need to define new methods to handle this complexity.

One way of simplifying is to consider the power converters as building blocks. This way we can use the converters without knowing the precise behavior of each component inside them. This simplification allows the designer to focus on optimizing the most important characteristics of a power system:

Using building blocks like commercial and pre-designed converters makes the optimization process simpler. However, creating an optimal design is still challenging. The main difficulties that the designer will find are the following:

Some manufacturers, such as National Semiconductor, have created tools like WEBENCH Power Designer [National Semiconductor, 2011] to solve part of these problems. This tool designs power systems using the manufacturer's own products. It is able to find solutions for regular-sized architectures and uses a weighted approach to classify the solutions.

The main disadvantage is that, for commercial reasons, only National Semiconductor products can be used. This can be very constraining, since manufacturers may not want to use products from a single vendor in their designs. In addition, we could find better solutions by combining the best products from different converter manufacturers.

It is possible to define a new methodology that helps the power system designer to make the decisions that have the greatest impact on the cost, size and energy losses of the power system. The designer will be able to determine:

These results can be used by the designer in different ways. The main cases are the following:

In order to be useful, this methodology needs to have the following characteristics:
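To make the idea of a weighted classification concrete, the sketch below ranks three hypothetical candidate solutions with a normalized weighted sum. It is only a generic illustration of the technique; it does not reproduce the algorithm used by any commercial tool, and the candidate names, metric values and weights are assumptions.

```python
# Hypothetical candidate solutions with three metrics (lower is better).
candidates = {
    "A": {"loss_W": 1.2, "size_mm2": 150.0, "cost_usd": 4.0},
    "B": {"loss_W": 0.8, "size_mm2": 220.0, "cost_usd": 6.5},
    "C": {"loss_W": 1.0, "size_mm2": 120.0, "cost_usd": 5.0},
}


def normalized(candidates):
    """Scale each metric by its worst (largest) value so units do not matter."""
    metrics = next(iter(candidates.values())).keys()
    worst = {k: max(c[k] for c in candidates.values()) for k in metrics}
    return {name: {k: v / worst[k] for k, v in c.items()}
            for name, c in candidates.items()}


def rank(candidates, weights):
    """Return candidate names sorted from best (lowest score) to worst."""
    scaled = normalized(candidates)
    score = {name: sum(weights[k] * m[k] for k in weights)
             for name, m in scaled.items()}
    return sorted(score, key=score.get)


efficiency_weights = {"loss_W": 0.8, "size_mm2": 0.1, "cost_usd": 0.1}
cost_weights = {"loss_W": 0.1, "size_mm2": 0.1, "cost_usd": 0.8}

if __name__ == "__main__":
    print("efficiency-driven ranking:", rank(candidates, efficiency_weights))
    print("cost-driven ranking:", rank(candidates, cost_weights))
```

The ranking depends entirely on the chosen weights, so a single weighted score hides the trade-offs between objectives that a designer may want to inspect directly.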

In order to create a tool better than the existing ones, we need to provide:

Designing a power system requires a different number of steps depending on its type. Figure 2.3 shows a visual representation of a top-down methodology for the design of a power system. Following this approach, we first define the specifications (level 1). Based on those specifications, we create a design (level 2) and, if we have the appropriate simulation technologies, we perform a system-level validation (level 3). At this step, if the design is not satisfactory, we can always return to level 2 and create a new one.

The tasks that the designer has to perform in level 2 are not well defined, because they depend on the designer's preferences and experience and on the type of system to design. However, this step is fundamental: the designer has to be sure that the selected solution is the most appropriate before continuing with the next step.

The proposed solution splits the design level into two intuitive tasks (see Figure 2.4): definition of the architecture and selection of converters. For each task we have to provide an adequate design and validation tool that can assist the designer through the whole process.

One of the biggest advantages is that, by using these design and validation tools, the designer will be able to create a reliable design more efficiently. Figure 2.5 illustrates this process with an analogy. Without adequate tools during the design task, we take a slow and long way (see Figure 2.5.a). The proposed approach provides two shorter and faster routes that the designer can take repeatedly until an adequate solution is found (see Figure 2.5.b).

In order to create the methods and tools necessary to fulfill the proposed solution, we need to solve the following problems:

The architecture search and component selection algorithms should have the following characteristics:

The work presented in this document has been constrained to the design of power supply systems comprised of DC/DC converters. Nevertheless, the methods can be applied to AC systems since the methodology does not rely on the modeling approach used. The only requirements for the models are that we should be able to calculate the energy losses, size and cost of the power architecture.
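The requirement stated above (being able to calculate the energy losses, size and cost of an architecture) can be illustrated with a small sketch in which converters are treated as building blocks arranged in a tree. The class names, the loss functions and all numeric values are hypothetical; the only assumption is that each converter exposes its loss as a function of its output power, together with a size and a cost.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Union


@dataclass
class Load:
    name: str
    power_W: float


@dataclass
class Converter:
    name: str
    loss_W: Callable[[float], float]   # loss as a function of output power
    size_mm2: float
    cost_usd: float
    children: List[Union["Converter", Load]] = field(default_factory=list)


def input_power(node):
    """Power drawn by a node: its downstream demand plus its own loss."""
    if isinstance(node, Load):
        return node.power_W
    p_out = sum(input_power(child) for child in node.children)
    return p_out + node.loss_W(p_out)


def load_power(node):
    """Power finally delivered to the loads below a node."""
    if isinstance(node, Load):
        return node.power_W
    return sum(load_power(child) for child in node.children)


def converters(node):
    """Iterate over every converter in the architecture tree."""
    if isinstance(node, Converter):
        yield node
        for child in node.children:
            yield from converters(child)


# Hypothetical two-stage architecture: one bus converter feeding two
# point-of-load converters. Loss models are assumed to be affine in power.
pol_cpu = Converter("pol_cpu", lambda p: 0.10 + 0.08 * p, 80.0, 2.5,
                    [Load("cpu", 10.0)])
pol_mem = Converter("pol_mem", lambda p: 0.05 + 0.10 * p, 40.0, 1.2,
                    [Load("memory", 2.0)])
bus = Converter("bus", lambda p: 0.50 + 0.05 * p, 200.0, 6.0,
                [pol_cpu, pol_mem])

total_loss_W = input_power(bus) - load_power(bus)
total_size_mm2 = sum(c.size_mm2 for c in converters(bus))
total_cost_usd = sum(c.cost_usd for c in converters(bus))
efficiency = load_power(bus) / input_power(bus)

if __name__ == "__main__":
    print(f"loss={total_loss_W:.3f} W size={total_size_mm2:.0f} mm^2 "
          f"cost={total_cost_usd:.2f} USD efficiency={efficiency:.3f}")
```

Because the architecture only calls `loss_W`, `size_mm2` and `cost_usd`, any modeling approach that can supply these three quantities could be plugged in, which is the sense in which the methodology does not depend on the modeling approach.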

The accuracy of the results depends directly on the accuracy of the models used. If the designer uses incorrect information to create the models, the results will be incorrect. Capturing models of converters is a critical step; hence we have to provide adequate tools that simplify it and reduce the chances of making mistakes.

For size and cost, obtaining an accurate value is not important. What matters is obtaining a relative value that allows us to determine whether one option is more expensive or bigger than another.

In the following section we will present a set of ideas that will define the way this work is performed.

This section presents the ideas considered to build the scientific paradigm used in this thesis. The main purpose of describing them is to help the reader to understand the motivation, the methods and the interpretation of the results presented in this document. This section consists of three parts. In each part one idea is presented by using a series of examples trying to clarify its importance.

Learning has a cost, but ignorance costs even more. This idea has been explored in economics for a long time. Researchers have expressed this relationship in a very simple way (Figure 2.6): the more knowledge we acquire in the early stages of the design, the more flexibility we have and the less money is spent [Fabrycky and Blanchard, 1991].

Buildings cannot be designed on the run. When designing a bridge, for example, it is necessary to perform many simulations in order to know how it will behave under different conditions. A famous case is the Tacoma Narrows bridge [Prelinger Archives, 2011]. The original bridge (opened in July 1940) collapsed because wind-induced vibrations made it oscillate at its natural frequency until it was destroyed. At that time, the phenomenon was not well understood. Since then, this type of analysis has been performed for large structures, and no one builds one before being sure about how it will behave.

In electronics there are many known effects that we have to test before being sure that our design will not fail. Appropriate tools simplify this task. The proposed methodology aims to help the designer acquire more knowledge about the system. As [Fabrycky and Blanchard, 1991] state, this provides more flexibility and reduces the design time and cost.

Some people are capable of performing very complex calculations quickly using only their brain power. An alternative for those of us who do not have that ability is to use a computer equipped with the appropriate tools. As of this writing (2011), computers cannot be considered intelligent. However, computers can behave in a way that may appear intelligent. Playing chess is an activity associated with intelligent people, yet since 1997 computers have proven able to beat the best human players. The most famous case is the match between the World Chess Champion, Garry Kasparov, and the computer Deep Blue [Hsu, 2002]. Since then, computers have shown repeatedly that they are better than humans at playing chess.

A computer with adequate programs can perform many tasks faster than humans. For that reason it is important to distinguish the activities that require human intelligence from those that the computer can perform autonomously.

In this thesis we want to create the methods, tools and programs necessary to turn a computer into a fast calculation machine dedicated to designing power systems. We are not trying to compete against human designers, but to help them make better designs.

As Voltaire said: “Doubt is not a pleasant condition, but certainty is absurd”. Very often, as engineers, researchers or scientists, we tend to forget these wise words. Lack of information can make us think that we have a good solution, since we have no reference points; our level of certainty can be high simply because we ignore other possibilities. On the other hand, excess of information can overwhelm us, making it hard to define a good solution. As designers we have to avoid falling into either condition. We can achieve that by using appropriate methodologies and measuring mechanisms, that is, by using a good strategy.

Take as an example the problem of the “Fog Creek Programmers” (posted on April 16, 2010 [Tech Interview, 2010]). In this problem, an assassin lines up 100 programmers and puts on each of them a hat that can be red or blue (50 red and 50 blue). They cannot see their own hats, but they can see the hats of the programmers in front of them. The assassin starts with the programmer at the back and asks him “What color is your hat?”. If the programmer gives an incorrect answer, the assassin kills him and continues with the next in line. The problem consists of determining how we can save the most programmers. Since we do not know how the assassin will distribute the hats, we cannot be certain that we will save them all. The only thing we can do is define a good strategy. The simplest strategy is to tell every programmer to always answer the same color; that way we save 50% of them. Other strategies can save more programmers, but we are not going to cover them in this document.

In this work we try to increase the level of certainty by using a good strategy. This strategy is the central idea of the proposed methodology.

This thesis is organized in three parts:

Background: The main objective of the chapters within this part is to present a summary of the methods and techniques necessary to understand the foundations of this thesis. In these chapters we present the state of the art and works related to metaheuristic optimization algorithms and behavioral modeling.

New Techniques for the Automatic Design of Power Supply Systems: in this part we present the proposed methods for the automatic search of power architectures and the selection of converters. This part is split into two chapters, one focused on the architecture generation algorithms and the other on converter selection. Each chapter contains a validation section where the experimental results are presented. This part contains the central work of this thesis.

Application Example of the Presented Techniques: this part contains a more practical validation of the proposed methods. We have included examples of real power systems, created with the purpose of covering the main design cases.

All models are wrong, but some are useful.

George E. P. Box

This chapter presents a review of the modeling methods that can be used to perform fast simulations of power systems. It starts by describing the key factors that affect the execution time of complex models and introduces a few techniques that we can use to reduce this time.

The second section focuses on the modeling techniques that allow us to characterize the energy loss of a power converter. Once we are able to calculate the energy loss of a converter, we can calculate the efficiency of a complete power architecture. The third section presents the modeling methodology used to calculate the cost and size of the architectures.

This section presents a summary of the key points that need to be considered in order to perform fast and effective simulations. The time that a simulation of a dynamic system takes to complete (the execution time) depends mainly on two factors (Figure 3.1):

These two factors are described in more detail in the following subsections.

Simple models can be solved by simple methods. Complex models, on the contrary, may require sophisticated (and slower) methods to reach a solution. Model complexity can be divided into two categories: the number of elements that the model contains and the complexity of its equations. The number of elements (in most simulators) directly defines the number of equations that the simulator has to solve. Solving a system with a large number of equations implies performing a large number of calculations, which results in a slower simulation. One option for making simulations faster is to reduce the number of equations. To that end, some simulators symbolically simplify the described model before creating its final representation. Simulators for the Modelica language [Modelica Association, 2011] are one example: the model is preprocessed in order to obtain a representation that is easier to solve. Consider the example in Figure 3.2. The circuit in Figure 3.2.a contains seven resistors, while the circuit in Figure 3.2.b contains only one. The model with seven resistors will take more time to simulate. If we are only interested in the current that the voltage source provides, we can reduce the circuit with seven resistors to the circuit with one. That way we obtain a circuit that simulates faster and still provides the information that we need.
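The reduction of a resistor network to a single equivalent resistor can be sketched as follows. The topology and the values used here are assumptions made for illustration (they are not taken from Figure 3.2): R1 in series with the parallel combination of R2 and the series pair R3 and R4, in series with R5, R6 and R7 in parallel.

```python
def series(*resistances):
    """Equivalent resistance of resistors connected in series."""
    return sum(resistances)


def parallel(*resistances):
    """Equivalent resistance of resistors connected in parallel."""
    return 1.0 / sum(1.0 / r for r in resistances)


V_SOURCE = 12.0  # volts, assumed
R1, R2, R3, R4, R5, R6, R7 = 10.0, 30.0, 20.0, 40.0, 30.0, 30.0, 30.0  # ohms

# Seven elements collapse into one before any simulation is run.
r_equivalent = series(R1, parallel(R2, series(R3, R4)), parallel(R5, R6, R7))
source_current = V_SOURCE / r_equivalent

if __name__ == "__main__":
    print(f"R_eq = {r_equivalent:.2f} ohm, I_source = {source_current:.3f} A")
```

The simplified model answers the question about the source current with a single division, but the individual branch currents are no longer available, which is the kind of detail traded away for speed.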

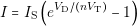

When the model contains only linear equations, it can be solved with simple methods such as Gaussian elimination. However, if the model is nonlinear, it requires computationally more expensive methods such as Newton-Raphson. To simplify the simulation of models with complex equations, we can use piecewise linear models. The model of a diode is given by the following equation:

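$$I = I_S\left(e^{V_D/(n V_T)} - 1\right) \tag{3.1}$$

where $I_S$ is the saturation current, $n$ is the ideality factor and $V_T$ is the thermal voltage.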

In this equation we can see a nonlinear relation between the voltage ($V_D$) and the current ($I$) of the diode. However, in many cases, such as the simulation of power rectifiers, the ideal model of a diode is good enough to provide useful results. The equations of the ideal diode are the following:

$$I = 0 \quad \text{if } V_D < 0 \tag{3.2}$$

$$V_D = 0 \quad \text{if } I > 0 \tag{3.3}$$
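The difference in effort between the two diode models can be seen in a minimal sketch. It assumes a series circuit of a voltage source, a resistor and a diode, with the nonlinear diode described by the standard exponential (Shockley) relation and the ideal diode treated as a short circuit when conducting. All parameter values, and the starting guess of the iteration, are assumptions chosen for illustration.

```python
import math

I_S = 1e-12       # saturation current [A], assumed
N = 1.0           # ideality factor, assumed
V_T = 0.02585     # thermal voltage near room temperature [V]
V_SOURCE = 48.0   # source voltage [V], assumed
R = 10.0          # series resistance [ohm], assumed


def diode_current(v_d):
    """Exponential diode model: current as a function of diode voltage."""
    return I_S * (math.exp(v_d / (N * V_T)) - 1.0)


def solve_newton(v0=0.8, tol=1e-12, max_iter=100):
    """Newton-Raphson on f(v_d) = I_diode(v_d) - (V_SOURCE - v_d) / R.

    The start value lies above the root, where this convex function makes
    the iteration decrease monotonically towards the solution.
    """
    v_d = v0
    for iteration in range(1, max_iter + 1):
        f = diode_current(v_d) - (V_SOURCE - v_d) / R
        df = I_S / (N * V_T) * math.exp(v_d / (N * V_T)) + 1.0 / R
        step = f / df
        v_d -= step
        if abs(step) < tol:
            return v_d, iteration
    raise RuntimeError("Newton-Raphson did not converge")


def solve_ideal():
    """Ideal diode: zero voltage drop when conducting, zero current otherwise."""
    return max(V_SOURCE, 0.0) / R


v_diode, iterations = solve_newton()
i_nonlinear = (V_SOURCE - v_diode) / R
i_ideal = solve_ideal()

if __name__ == "__main__":
    print(f"nonlinear: V_D={v_diode:.4f} V, I={i_nonlinear:.4f} A "
          f"after {iterations} iterations")
    print(f"ideal:     I={i_ideal:.4f} A in a single evaluation")
```

With a source voltage much larger than the diode drop, the ideal model gives a current within a few percent of the nonlinear solution while avoiding the iteration entirely.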

The second factor that defines the execution time is the number of calculation points. The number of points depends on two parameters: the simulated time and the dynamics of the model. Note that we are using two similar names for different concepts. The simulated time is the virtual time that is simulated using the model, whereas the execution time is the time the computer takes to calculate the results. For example, the typical simulated time of a power converter is on the order of milliseconds, but the actual execution time can be several minutes.

The dynamics of the model define how fast the model variables change. If the variables never change, the system is static and only one point needs to be calculated. A model with very fast dynamics (high frequencies) requires more calculation points than a model with slow dynamics.

If a model has slow dynamics, the simulation can be made very fast. On the other hand, if the model has fast dynamics (high frequencies) and a long simulated time, the simulation will take a long time.

As a rule of thumb, a model runs faster when fewer details are simulated (Figure 3.3). If we want to improve the simulation speed, we have to give up some details of the model by treating some variables as negligible. This costs some accuracy, but that is not bad in all cases. To obtain a fast model, the model should simulate only the information required by the test at hand.
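A rough estimate of the number of calculation points follows directly from the two parameters just described. The sketch below assumes a fixed-step solver that needs a given number of points per period of the fastest dynamics; real simulators usually adapt the step size, so the function and its default value are only illustrative assumptions.

```python
def estimated_points(simulated_time_s, highest_freq_hz, points_per_period=50):
    """Estimate the calculation points for a fixed-step simulation.

    A static model (no dynamics) needs a single point.
    """
    return max(1, round(simulated_time_s * highest_freq_hz * points_per_period))


# Hypothetical cases for 5 ms of simulated time.
switching_model = estimated_points(5e-3, 500e3)   # 500 kHz switching detail
averaged_model = estimated_points(5e-3, 5e3)      # only 5 kHz dynamics kept
static_model = estimated_points(5e-3, 0.0)        # variables never change

if __name__ == "__main__":
    print(switching_model, averaged_model, static_model)
```

Under these assumptions, removing the switching-frequency detail reduces the number of points by the ratio of the two frequencies, here a factor of one hundred.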

In power electronics, the typical techniques to make simulations faster are the use of simplified semiconductor models and the use of averaging techniques.

These two techniques can drastically improve the speed of a simulation. However, when simplified semiconductor models are used, the information about what happens when the semiconductors switch is lost. Averaging techniques completely drop the information produced when the converter changes state, yet these models are particularly useful for designing control loops.

Another technique to obtain fast simulation models is behavioral modeling. The main idea of behavioral models is to define a simpler model that mimics the input-output behavior of the real system during a certain number of tests. This simpler model may reduce both the model complexity and the number of calculation points.

The application of this technique to the simulation of power systems is presented in the next section.

The application presented in this document requires modeling and simulation approaches that enhance the following characteristics:

Usually, capturing the model of a converter is not an easy task. This is mainly because the typical modeling approaches require detailed knowledge of the converter, e.g., the topology and the values of the components. These modeling approaches are not suitable for this application because capturing a large number of converters can be time consuming and error prone. In addition, averaged models are still too complex and slow for this application.

Behavioral models are the best choice for this application. The main reasons are the following:

These models also present limitations. The first one is that the models are developed to behave in a specific way under given conditions. For example, if we do not include the protections of the converter, the model will not turn off when an over-current occurs. Therefore, the user should be careful in order to avoid misinterpreting the simulation results.

Typical power supply architectures used in mobile devices may include the following components:

Each of these elements has a different level of interaction. Depending on the level of detail modeled, the physical effects involved may acquire more or less importance. For example, when the losses are calculated, the effect of the protections and EMI filters is minimal.

Table 3.1 shows a summary of the different components of a power architecture and the modeling level at which their effects cannot be neglected. Depending on the information that we want to know about the system, a different modeling level may be more suitable.

| Modeling Level | Converter | Protections | Filters | Sources | Loads |
| --- | --- | --- | --- | --- | --- |
| Static | ⋅ | ⋅ | ⋅ | | |
| Dynamic | ⋅ | ⋅ | ⋅ | ⋅ | |
| Event-Driven | ⋅ | ⋅ | ⋅ | ⋅ | ⋅ |
| High Frequency | ⋅ | ⋅ | ⋅ | ⋅ | ⋅ |

In the current application, it is necessary to calculate the cost, size and losses of the power supply system. These three parameters can be calculated without going into the full detail of the converter behavior. As mentioned in the previous section, by reducing the level of detail it is possible to obtain simple and fast models that recreate the physical effects of importance. In this case, we are taking into account the following considerations:

These two considerations reduce the requirements of the models. We can obtain everything that we need to calculate by using static level models. Another consequence is that we can model the protections of the converter at a functional level. This means that if the converter operates at steady state, no protection should be active. On the other hand, if a protection is active, even in a steady-state simulation, the converter is not appropriate and should not be used.

Considering that we want to simulate the power architecture at a static level (steady state), it is possible to simplify the problem by grouping the effects of the filters and protections. This leads us to the conclusion that we only need to create models for the converters, the loads and the sources.

The following subsections present the details of how to model these components.

The behavioral modeling of DC/DC converters has been presented by [Oliver, 2007]. This model is based on the Wiener-Hammerstein structure (Figure 3.4). The structure consists of three blocks that can simulate complex dynamic and nonlinear behaviors. The input and output linear networks are used to simulate the dynamic behavior; in the case of a power converter, these blocks simulate the inrush current and the output voltage transient response. The static block simulates the nonlinear power transfer of a converter. More details about the complete features of these models can be found in [Prieto et al., 2007, Oliver et al., 2008b, Oliver et al., 2008a].

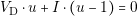

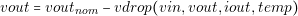

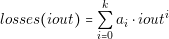

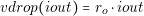

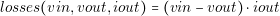

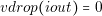

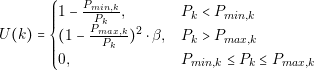

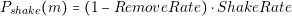

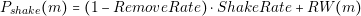

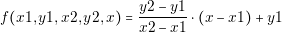

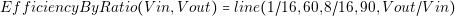

In this application only the static model is used, since transient behavior and EMI are negligible at this level of abstraction. Therefore, the behavioral model of a DC/DC converter is simplified to the following equations:

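| $v_{in} \cdot i_{in} = v_{out} \cdot i_{out} + \mathit{losses}$ | (3.4) |

| $v_{out} = v_{outnom} - \mathit{vdrop}$ | (3.5) |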

Using equation-based modeling, we can create complex models by connecting small models. Each small model has its own equations, and new equations are generated each time we connect one of its pins. This approach allows us to represent the models as typical electric components. Figure 3.5 shows the electric model equivalent to equations (3.4) and (3.5).

It can be seen in equations (3.4) and (3.5) that the functions losses and vdrop may depend on variables such as input voltage, output voltage, output current and temperature. Obtaining these two functions can be quite challenging because each of them represents a surface in five dimensions. These two functions can be simplified depending on the information available or the type of converter. In order to simplify these functions the following assumptions are considered:

Taking into account the previous points, we can approximate the losses and vdrop functions of a typical DC/DC converter by a very small model, without a significant loss in accuracy:

| $\mathit{losses}(i_{out}) = \sum_{i=0}^{k} a_i \cdot i_{out}^{\,i}$ | (3.6) |

| $\mathit{vdrop}(i_{out}) = r_o \cdot i_{out}$ | (3.7) |

In equation (3.6) the losses are approximated by a polynomial function of the output current. Figure 3.6 shows a comparison between the losses of a converter modeled with a polynomial and the actual measurements. The term $r_o$ in equation (3.7) models the output voltage drop due to conduction. In some cases this value may be negligible.
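As a minimal sketch of how such a static converter model can be evaluated, the snippet below assumes polynomial losses in the output current and a resistive output drop, combined through a power balance between input and output. The function names, the coefficient values and the operating point are hypothetical and chosen only for illustration.

```python
def polynomial_losses(i_out, coeffs):
    """Evaluate losses(i_out) = sum(a_i * i_out**i) with Horner's rule."""
    total = 0.0
    for a in reversed(coeffs):
        total = total * i_out + a
    return total


def converter_operating_point(v_in, v_out_nom, i_out, coeffs, r_o=0.0):
    """Static operating point of a DC/DC converter (hypothetical helper)."""
    v_out = v_out_nom - r_o * i_out            # output voltage after the conduction drop
    losses = polynomial_losses(i_out, coeffs)  # losses in watts
    p_out = v_out * i_out
    i_in = (p_out + losses) / v_in             # power balance: p_in = p_out + losses
    return {"v_out": v_out, "losses": losses, "i_in": i_in,
            "efficiency": p_out / (p_out + losses)}


# Illustrative values only: a 12 V to 3.3 V converter delivering 2 A.
point = converter_operating_point(v_in=12.0, v_out_nom=3.3, i_out=2.0,
                                  coeffs=[0.1, 0.05, 0.02], r_o=0.01)
print(point)
```

In practice the coefficients would be fitted to the efficiency curves published by the manufacturer; that fitting step is not shown here.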

The behavior of a linear regulator can be approximated using equations (3.8) and (3.9).

| $\mathit{losses}(v_{in}, v_{out}, i_{out}) = (v_{in} - v_{out}) \cdot i_{out}$ | (3.8) |

| $\mathit{vdrop}(i_{out}) = 0$ | (3.9) |

First, it is assumed that the linear regulator has perfect regulation, which is why the voltage drop in equation (3.9) is 0. In addition, the losses are practically defined by the voltage difference and the output current (equation (3.8)).
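A short sketch of the linear regulator under the same assumptions (perfect regulation, losses equal to the voltage difference times the output current) is given below. The helper name and the numeric values are hypothetical. Note that the power balance then forces the input current to equal the output current, so the efficiency reduces to the ratio of output to input voltage.

```python
def linear_regulator_point(v_in, v_out_nom, i_out):
    """Static operating point of an ideal linear regulator (hypothetical helper)."""
    losses = (v_in - v_out_nom) * i_out          # dissipated in the pass element
    i_in = (v_out_nom * i_out + losses) / v_in   # power balance, simplifies to i_out
    return {"v_out": v_out_nom, "losses": losses, "i_in": i_in,
            "efficiency": v_out_nom / v_in}


# Illustrative values only: 5 V to 3.3 V at 1 A.
ldo = linear_regulator_point(v_in=5.0, v_out_nom=3.3, i_out=1.0)
print(ldo)
```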

In most commercial modules it is possible to use one specific converter with more than one input voltage and output voltage configuration. In these cases, we recommend creating one model for each configuration of the converter. Usually the manufacturers provide efficiency curves for different input/output voltages. From these efficiency curves it is possible to obtain models with a very good level of accuracy for each of these configurations.

If we require models with a higher level of complexity, we can obtain the losses and vdrop functions by using other analytic methods. [Elbanhawy, 2006] and [Das and Kazimierczuk, 2005] present two approaches based on calculating the losses by individual parts: switching, conduction and circulating energy. These approaches are especially useful for ad-hoc designs before a prototype is constructed and measured.

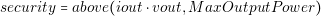

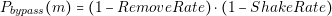

Until this point, we have presented only the electrical model of the losses. However, it is possible to add other features to this model, for example, equations to determine if the converter is operating outside its maximum specifications and other characteristics like the output impedance. It is possible to detect if a converter is providing more output power than its maximum defined by adding the following equation to the model.

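| $\mathit{security} = \mathit{above}(i_{out} \cdot v_{out}, \mathit{MaxOutputPower})$ | (3.10) |

In equation (3.10) the function above is defined as follows:

| $\mathit{above}(x, y) = 1$ if $x > y$, and $0$ otherwise | (3.11) |

Therefore, the security value of a converter is 1 if the output power ($i_{out} \cdot v_{out}$) is greater than the maximum output power of the converter.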
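The check described above can be sketched in a few lines. The function names mirror the terms used in the text; the numeric values are hypothetical.

```python
def above(value, limit):
    """Return 1 if value exceeds limit, 0 otherwise."""
    return 1 if value > limit else 0


def security(v_out, i_out, max_output_power):
    """Flag a converter that delivers more than its maximum output power."""
    return above(i_out * v_out, max_output_power)


# Illustrative values only: a converter rated for 10 W.
print(security(v_out=3.3, i_out=2.0, max_output_power=10.0))  # 6.6 W, within rating
print(security(v_out=3.3, i_out=4.0, max_output_power=10.0))  # 13.2 W, over rating
```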

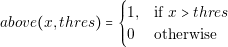

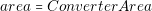

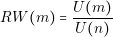

The area and cost of a converter are constant. Thus, they can be modeled with trivial equations (equations (3.12) and (3.13)).

| $\mathit{area} = \mathit{ConverterArea}$ | (3.12) |

| $\mathit{cost} = \mathit{ConverterCost}$ | (3.13) |

To summarize, Tables 3.2, 3.3 and 3.4 present the equations needed to model typical converters and linear regulators.

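| $v_{in} \cdot i_{in} = v_{out} \cdot i_{out} + \mathit{losses}(i_{out})$ |

| $v_{out} = v_{outnom} - \mathit{vdrop}(i_{out})$ |

| $\mathit{security} = \mathit{above}(i_{out} \cdot v_{out}, \mathit{MaxOutputPower})$ |

| $\mathit{area} = \mathit{ConverterArea}$ |

| $\mathit{cost} = \mathit{ConverterCost}$ |

| $\mathit{losses}(v_{in}, v_{out}, i_{out}) = (v_{in} - v_{out}) \cdot i_{out}$ |

| $\mathit{vdrop}(i_{out}) = 0$ |

| $\mathit{losses}(i_{out}) = \sum_{i=0}^{k} a_i \cdot i_{out}^{\,i}$ |

| $\mathit{vdrop}(i_{out}) = r_o \cdot i_{out}$ |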

The following section presents the models of the loads and sources. These models are developed with the same objective of obtaining very small and fast models.

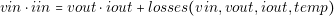

Loads and sources have very simple models. They are based on the electrical model shown in Figure 3.7. When the voltage source is a battery, we can consider Battery Capacity as a parameter. We can use this parameter to estimate the autonomy of the system. We are not considering at this simulation level effects like aging of the battery and output impedance. The voltage source model consists of the following single equation:

| (3.14) |

The behavior of the loads can be more complex. Nowadays platforms implement complex power management schemes that make it hard to define a deterministic behavior for the load. For example, if the load is a processor, its power consumption may vary very aggressively. In this case the power consumption can be characterized by its probabilistic behavior. This kind of behavior cannot be captured in a static electric model. The evaluation of the performance of the power supply system under the probabilistic behavior of the loads is presented in the following section. However, we still need a simple electrical model for the loads. We can describe a load in two ways: as a power consumer or as a current consumer. The equations of the power and current consumers are (3.15) and (3.16) respectively.

| (3.15) |

| (3.16) |

Once we have defined these simple models, it is possible to create a model of a complex architecture and evaluate its performance with a good accuracy and in a very short time.

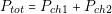

In this application, as stated before, a power architecture is evaluated considering three metrics:

The real cost of a platform is very difficult to calculate because many variables must be taken into account, for example component storage cost and engineering cost. Nevertheless, it is possible to calculate the cost of the components used to build the architecture. This approach to cost calculation makes it possible to determine when one power architecture is more expensive than another.

In the case of size calculation, if we want to know the real size of the platform, we will need to design the real layout of the platform. As in the cost calculation, the calculated area is relative. This calculation approach is good enough to know the size difference between two power architectures. The equations to calculate the cost and size are the following:

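| $\mathit{cost}_{total} = \sum_{n=1}^{k} \mathit{cost}(C_n)$ | (3.17) |

| $\mathit{area}_{total} = \sum_{n=1}^{k} \mathit{area}(C_n)$ | (3.18) |

In these equations $C_n$ represents each of the $k$ converters of the architecture.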
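The relative cost and size comparison can be sketched as a plain sum over the converters of each architecture. The `Converter` record, the part names and all numeric values below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Converter:
    name: str
    cost: float  # component cost, arbitrary currency units
    area: float  # board area, arbitrary units


def architecture_cost(converters):
    """Sum of the component costs of all converters."""
    return sum(c.cost for c in converters)


def architecture_area(converters):
    """Sum of the areas of all converters."""
    return sum(c.area for c in converters)


# Two candidate architectures feeding the same loads (illustrative values).
arch_a = [Converter("buck_5v", 1.20, 40.0), Converter("ldo_3v3", 0.35, 9.0)]
arch_b = [Converter("buck_5v", 1.20, 40.0), Converter("buck_3v3", 0.90, 36.0)]

print(architecture_cost(arch_a), architecture_area(arch_a))
print(architecture_cost(arch_b), architecture_area(arch_b))
```

The absolute numbers are not meaningful on their own; only the difference between the two architectures is used.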

We can calculate the power losses by simulating the electrical circuit of the complete architecture and obtaining the difference between the input and output power. This simulation requires only the static behavior; this means that no transient characteristics of the system are considered. In order to avoid incorrect results in the simulation the following conditions are considered:

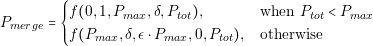

In the previous section, it was shown that each converter defines a variable called “security”. This variable has the value 1 if the converter is operating outside its specifications. Using these values, we define another variable that signals whether the architecture uses any converter incorrectly. The equation is the following:

| (3.19) |

It is possible to detect and discard invalid architectures by using this value.
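One possible way to combine the per-converter flags is sketched below. The aggregation rule (the architecture is flagged as soon as any converter is flagged) is an assumption made for illustration, and the function name is hypothetical.

```python
def architecture_security(converter_flags):
    """Return 1 if at least one converter reports security == 1 (assumed rule)."""
    return 1 if any(flag == 1 for flag in converter_flags) else 0


def valid_architectures(candidates):
    """Keep only the candidates in which no converter is misused.

    `candidates` maps an architecture name to its list of converter flags.
    """
    return [name for name, flags in candidates.items()
            if architecture_security(flags) == 0]


candidates = {"arch_a": [0, 0, 0], "arch_b": [0, 1, 0]}
print(valid_architectures(candidates))
```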

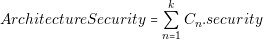

Another important consideration (as mentioned before) is that some loads may present highly dynamic behavior. When the designer has this information, it is possible to calculate the average losses of the system. There are mainly three approaches (Figure 3.8):

Figure 3.8.a shows the current waveform of a load. Based on this waveform it is possible to obtain a representation of the instantaneous current values and their probability. The first approach is the calculation at maximum output power (Figure 3.8.b). In this case, the loads are set to their maximum power and the total losses are calculated. This operating point is important because it assures the designer that the power system can provide the required power without exceeding the limits of the components. The main disadvantage of designing the power converter for this operating point is that the system is optimized only for that point. Therefore, if there is a converter that performs better at light load, this advantage is not considered.

If more detailed information about the load behavior is available, it is possible to characterize a few discrete states and their probabilities (Figure 3.8.c) and obtain an average value for the losses.

| $\mathit{losses}_{avg} = \sum_{s} P_s \cdot \mathit{losses}_s$ | (3.20) |

In equation (3.20), $s$ represents the state, $\mathit{losses}_s$ the losses of the state and $P_s$ its probability.
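The probability-weighted average over discrete load states can be sketched as follows. The state probabilities and losses are hypothetical values; in the real flow each losses figure would come from a static simulation of the architecture in that state.

```python
def average_losses(states):
    """Probability-weighted average of the losses.

    `states` is a list of (probability, losses) pairs whose probabilities sum to 1.
    """
    total_probability = sum(p for p, _ in states)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("state probabilities must sum to 1")
    return sum(p * losses for p, losses in states)


# Illustrative states: idle, typical and peak activity of a load.
states = [(0.7, 0.10), (0.2, 0.50), (0.1, 1.20)]
print(average_losses(states))
```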

We can make a rough approximation of the average losses in cases where detailed information of the load behavior is not available. We can approximate the losses by calculating different points within a range of operation and averaging all the results. In this case, we are assuming that each calculation point has a similar probability. This case is shown in Figure 3.8.d.
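A sketch of this rough approximation is shown below: the losses are sampled at evenly spaced points of the operating range and every point is given the same weight. The function `losses_at` stands for a static simulation of the architecture and is replaced here by a hypothetical closed-form expression.

```python
def uniform_average_losses(losses_at, i_min, i_max, points=11):
    """Average the losses over evenly spaced, equally likely operating points."""
    if points < 2:
        raise ValueError("at least two calculation points are required")
    step = (i_max - i_min) / (points - 1)
    samples = [losses_at(i_min + n * step) for n in range(points)]
    return sum(samples) / points


# Hypothetical losses curve standing in for a simulation of the architecture.
def example_losses(i_out):
    return 0.1 + 0.05 * i_out + 0.02 * i_out ** 2


print(uniform_average_losses(example_losses, i_min=0.0, i_max=2.0, points=5))
```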

The choice of approach depends entirely on the information available about the loads. The main drawback of the averaging approaches is that if the number of loads is very large, and the number of discrete states for each load is also large, the total number of states of the system may be excessive, making it unfeasible to calculate the average losses over all states.

This chapter presented the methodology employed to obtain very simple and useful models for the typical components of a power architecture. These models allow us to calculate the energy efficiency, size and cost of a power architecture very quickly, since the models consist of simple equations. We use an equation-based approach; this allows us to connect small models (through equations) and create complex models of electric circuits.

In order to calculate the cost and size of an architecture, we use a measurement that provides a relative value among the architectures. For the cost, we calculate the cost of the components; and for the size, we calculate the total size.

This is one of the possible modeling/simulation alternatives that we can employ. Nonetheless, it is necessary to note that the architecture generation and component selection methodologies (presented in part two) do not rely on the approach used to calculate the performance criteria. These methodologies rely on the calculated values, not on the method used to calculate them. This gives us the flexibility to use any modeling methodology that we want.

Experience can be merely the repetition of same error often enough.

John G. Azzopardi

This chapter presents an introduction to different algorithms used in metaheuristic optimization. The objective of this chapter is to help the reader understand the underlying principles of these techniques.

Four different algorithms are explained: Ant Colony, Harmony Search, Simulated Annealing and Genetic Programming. These algorithms have been used as a reference for the algorithms proposed in this document. This chapter also includes the basic theory used in optimization algorithms with more than one objective.

The term metaheuristic has been adopted to describe the methods that optimize a problem by iteratively trying to improve a candidate solution with regard to a given criterion. The term “heuristic” comes from Greek and means “find” or “discover”, but it is used to describe techniques that find solutions by accumulating experience. The term “meta” can have different meanings, for example “after”, “beyond”, “with”, “adjacent” or “self”. One of the interpretations is “beyond heuristics”. The main idea in these techniques is to be able to create a better solution based on existing ones, that is, based on experience.

Contrary to mathematical optimization methods, these techniques can be used to solve optimization problems with discontinuous parameters and without gradient information. Metaheuristic techniques have been used to solve a wide range of problems that cannot be solved with traditional mathematical optimization techniques. Some areas of application are:

The basic principle of operation of these algorithms is to create a basis of knowledge (or experience) from which a new solution is proposed. This new solution is evaluated, and according to the results, the base of knowledge is updated. This procedure is illustrated in Figure 4.1. If the same steps are performed many times, the accumulated knowledge should be enough to easily find the optimum.

Each of these algorithms has a specific way of storing the knowledge, creating new solutions and updating the knowledge. For example, the category of evolutionary algorithms uses concepts inspired by Darwin's theory of evolution. The knowledge is stored as a set of solutions (individuals) called the population, and the different individuals of this population are crossed (combined) in order to obtain new solutions. These solutions are evaluated, and the population is updated by selecting the fittest individuals. This process is repeated for many generations, and at the end the individuals within the population are the ones that have the best characteristics.

Genetic algorithms create new solutions (offspring) by crossing the characteristics of two individuals (parents). For example, if we are optimizing a vector of parameters, the crossover will pick some of the values from each parent to create the offspring. If the offspring performs well, it will survive into the next generation. More details on genetic algorithms can be found in [Weise, 2009].
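The crossover of two parameter vectors can be sketched as below. This is a uniform crossover, one of several possible variants, and it is used here only as an illustration; the function name and the example vectors are hypothetical.

```python
import random


def uniform_crossover(parent_a, parent_b, rng=random):
    """Build one offspring by taking each value from either parent with equal chance."""
    if len(parent_a) != len(parent_b):
        raise ValueError("parents must have the same number of parameters")
    return [a if rng.random() < 0.5 else b for a, b in zip(parent_a, parent_b)]


# Illustrative parents; a seeded generator makes the run repeatable.
rng = random.Random(0)
mother = [1.0, 2.0, 3.0, 4.0]
father = [10.0, 20.0, 30.0, 40.0]
child = uniform_crossover(mother, father, rng)
print(child)
```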

Other algorithms that follow these same steps (but do not use the metaphor of genetics) are classified as Evolutionary Computation algorithms. This is the case of Ant Colony Optimization [Dorigo and Blum, 2005] and Harmony Search [Geem, 2009], which will be explained in later sections.

Metaheuristic algorithms can help to obtain solutions for complex combinatorial problems. The main disadvantage of these algorithms is that there is no guarantee that the global optimum will be found in all cases. This is the main reason why some users distrust this type of algorithm. However, as will be presented in chapters 5 and 6, these techniques produce very good results.

In the following sections four algorithms from the family of “nature-inspired” algorithms will be presented. The Ant Colony algorithm is inspired by the behavior of ants seeking a path between the food and their colony. Harmony Search is inspired by the music composition process. Simulated annealing is inspired by the annealing process in the metallurgical industry. Genetic programming (similar to genetic algorithms) is inspired by the natural selection process.

Ant colony [Dorigo and Blum, 2005] belongs to the family of swarm intelligence algorithms. The main characteristic of these algorithms is that the knowledge (or intelligence) is distributed among a group of individuals that work in a cooperative way. The ant colony algorithm was first developed with the idea of searching for paths in a graph.

A real ant searching for food starts by moving along a random path until it finds food, and then the ant returns to the colony. All ants leave a trace of pheromones where they have walked. These pheromones are reinforced when other ants follow the same path, but the pheromones also evaporate as time passes. If an ant finds this trace of pheromones, it will be attracted to follow the same path. If the path is a good path, many ants will start to use it because the amount of pheromones will be higher. On long paths the trace of pheromones is weak, which makes them less attractive. After some time, if the source of food is good and the path is short, all ants start to use that path between the colony and the source.

The ant colony algorithm imitates this behavior. The pheromone trail is stored in a table that contains the amount deposited on each edge. After each iteration, the amount of pheromones is decreased in order to simulate evaporation.

Figure 4.2 shows an example of how the pheromones are accumulated in three different times during the optimization. Figure 4.2.a shows the pheromones after a few iterations. After a significant number of iterations the pheromones look like in Figure 4.2.b. At the end of optimization, it can be seen in Figure 4.2.c that the pheromones are concentrated in the shortest path.

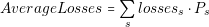

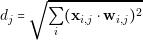

The most important part of the algorithm is the approach used to create a new solution. An ant (positioned at a vertex of a graph) has to decide which path (edge) it will take next. The first algorithm proposed was named Ant System [Dorigo et al., 1996]. Each edge has a value $\omega$ that represents the relative weight of selecting that path. This value is defined as:

| $\omega_{n,m} = \tau_{n,m}^{\alpha} \cdot \eta_{n,m}^{\beta}$ | (4.1) |

where $\tau_{n,m}$ is the amount of pheromones on the edge from $n$ to $m$ and $\eta_{n,m}$ is the desirability of the edge. The values $\alpha$ and $\beta$ control the influence of the pheromones and the desirability respectively. The desirability of an edge is given by the formula:

| $\eta_{n,m} = \frac{1}{d_{n,m}}$ | (4.2) |

where $d_{n,m}$ is the distance of the edge. The probability of an ant choosing the path from vertex $i$ to vertex $j$ is given by:

| $p_{i,j} = \frac{\omega_{i,j}}{\sum_{l} \omega_{i,l}}$ | (4.3) |

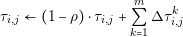

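where the term $\sum_{l} \omega_{i,l}$ represents the weight of all possible paths from vertex $i$ to any other vertex (represented by $l$). The pheromones on each edge are updated using the following formula:

| $\tau_{i,j} \leftarrow (1 - \rho) \cdot \tau_{i,j} + \sum_{k=1}^{m} \Delta\tau_{i,j}^{k}$ | (4.4) |

where $\rho$ is the evaporation rate, $m$ is the number of ants and $\Delta\tau_{i,j}^{k}$ is the quantity of pheromone laid on the edge by ant $k$. Depending on the success of an ant, more or less pheromone is deposited. The formula is:

| $\Delta\tau_{i,j}^{k} = \frac{Q}{L_k}$ if ant $k$ used the edge $(i, j)$ in its tour, and $0$ otherwise | (4.5) |

where $Q$ is a constant and $L_k$ is the length of the tour constructed by ant $k$.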
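The two central steps of Ant System, edge selection and pheromone update, can be sketched as follows. The sketch assumes the commonly published form of the algorithm: edge weights are the pheromone raised to alpha times the inverse distance raised to beta, and each ant deposits Q divided by its tour length on the edges it used. The dictionary layout, the function names and the numeric values are hypothetical.

```python
def transition_probabilities(current, candidates, tau, dist, alpha=1.0, beta=2.0):
    """Probability of moving from `current` to each candidate vertex."""
    weights = {
        j: (tau[(current, j)] ** alpha) * ((1.0 / dist[(current, j)]) ** beta)
        for j in candidates
    }
    total = sum(weights.values())
    return {j: w / total for j, w in weights.items()}


def update_pheromones(tau, tours, lengths, rho=0.5, q=1.0):
    """Evaporate all trails, then let every ant deposit pheromone on its tour."""
    new_tau = {edge: (1.0 - rho) * value for edge, value in tau.items()}
    for tour, length in zip(tours, lengths):
        for edge in zip(tour, tour[1:]):   # consecutive vertices form the used edges
            new_tau[edge] += q / length
    return new_tau


# Small directed graph with three vertices (illustrative values).
tau = {(0, 1): 1.0, (1, 2): 1.0, (0, 2): 1.0}
dist = {(0, 1): 1.0, (1, 2): 1.0, (0, 2): 2.0}

probs = transition_probabilities(0, [1, 2], tau, dist, alpha=1.0, beta=1.0)
tau_next = update_pheromones(tau, tours=[[0, 1, 2]], lengths=[2.0])
print(probs, tau_next)
```

Every edge of a tour must already be a key of `tau`; a complete implementation would also build the tours by sampling from the probabilities, which is omitted here.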

Other systems have been developed in order to improve the performance of the algorithm. Some examples of these systems are the MAX-MIN Ant System [Stützle and Hoos, 2000] and the Ant Colony System [Dorigo and Gambardella, 1997].

In the Ant Colony algorithms the knowledge (or experience) is stored in the pheromone table. This pheromone table contains information about how good a given path has been. New solutions are proposed based on this information.

The Harmony Search algorithm [Geem, 2009] was inspired by the observation that the aim of music is to search for a perfect state of harmony. Consider as an example a room full of musicians with detuned instruments. At periodic intervals, each one plays a single note. Based on what they hear, each musician has the opportunity to try another note or change the pitch of their instrument in order to make it sound better in combination with the rest of the instruments. If we repeat this process over and over, we will reach a point at which all the instruments are tuned and the musicians are playing notes that are in good harmony.

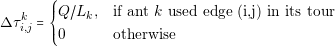

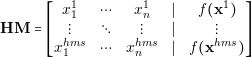

Harmony Search (HS) algorithm follows an intuitive set of rules to create new solution candidates. The central part of the HS algorithm is the Harmony Memory. Harmony Memory stores a group of solutions and it is analogous to the concept of population in evolutionary algorithms. Harmony Memory is represented as a vector of solution vectors:

| (4.6) |

where $x^n$ is a solution whose parameters are $x_1^n, x_2^n$ to $x_k^n$, and $f(x^n)$ is the aesthetic value or objective function value.

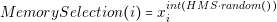

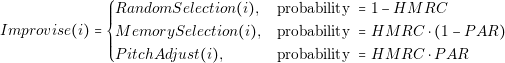

In the Harmony Search algorithm a new solution is built by selecting individual values for each parameter. These new solutions are called improvisations. In the simplest version of HS there are three different operations to obtain a new value: random selection, memory selection and pitch adjust.

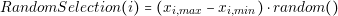

Random selection, as its name says, returns a random value ($x_i$) in the range from $x_{i,min}$ to $x_{i,max}$. This function can be defined as follows:

| $x_i = x_{i,min} + (x_{i,max} - x_{i,min}) \cdot \mathit{random}()$ | (4.7) |

where the function random() returns a value between 0 and 1.

The memory selection operation randomly takes the value of a solution stored in the harmony memory. Memory selection function is defined as follows:

| (4.8) |

where HMS is the number of elements of the harmony memory and the function int converts a real number to integer.

The pitch adjust operation takes randomly a value from the harmony memory and performs a slight variation of the value. The function is defined as:

| (4.9) |

where $bw$ defines the maximum deviation that the value can take.

Each function has a certain probability of being used. These probabilities are defined by HMRC and PAR. HMRC is the probability of picking a parameter from the harmony memory. PAR is the probability of adjusting the pitch of a value. The improvisation function is defined as:

| (4.10) |

These three cases are shown in a simplified way in Figure 4.3. Good values for HMRC and PAR are 0.7 and 0.3 respectively. Moreover these values can be changed during the optimization process.
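One improvisation step can be sketched as below. The sketch assumes a common arrangement of the three operations: with probability HMRC a value is taken from the harmony memory, that value is then pitch adjusted with probability PAR, and otherwise a random value is drawn. The exact form of the pitch adjustment, the clamping to the parameter range and all numeric values are assumptions made for illustration.

```python
import random


def improvise(memory, bounds, hmrc=0.7, par=0.3, bw=0.05, rng=random):
    """Build one new solution, choosing each parameter independently."""
    new_solution = []
    for i, (low, high) in enumerate(bounds):
        if rng.random() < hmrc:
            # Memory selection: reuse parameter i of a randomly chosen stored solution.
            value = memory[int(rng.random() * len(memory))][i]
            if rng.random() < par:
                # Pitch adjust: shift the value by at most bw, then keep it in range.
                value += bw * (2.0 * rng.random() - 1.0)
                value = min(max(value, low), high)
        else:
            # Random selection: any value of the allowed range.
            value = low + (high - low) * rng.random()
        new_solution.append(value)
    return new_solution


# Harmony memory with three stored solutions of two parameters each.
memory = [[0.0, 1.0], [0.5, 2.0], [1.0, 3.0]]
bounds = [(0.0, 1.0), (1.0, 3.0)]
candidate = improvise(memory, bounds, rng=random.Random(1))
print(candidate)
```

A full optimizer would evaluate the candidate and replace the worst solution in the memory when the candidate is better; that update step is not shown.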

Basically, Harmony Search proposes a new solution by combining the existing solutions in the memory. Three levels of adjustment can be considered: large, small and none. The large adjustment is the random selection operation; this operation is particularly useful to explore different areas of the solution space and to escape from local optima. The small adjustment is performed by the pitch adjust operation, which makes a local search in order to get closer to the local optimum. No adjustment corresponds to the memory selection operation, which reuses a stored value unchanged.

Simulated annealing takes its name from metallurgy. The annealing process consists of heating a metal and then cooling it down with the objective of creating bigger crystals in the structure. A metal with bigger crystals is more resistant and has fewer defects. When the metal is heated, the energy and diffusion rate of the ions increase. This breaks the structure of the crystals, and when the metal is cooled again, the crystals are rearranged into a better structure. The simulated annealing algorithm was inspired by that effect [Kirkpatrick et al., 1983].

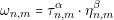

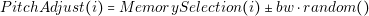

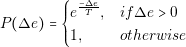

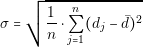

Simulated annealing does not define a specific way of proposing new solutions. Instead, the algorithm defines how solutions are selected. Similar to the previous algorithms, simulated annealing works in an iterative way. In each iteration a new solution $x_{n+1}$ is proposed. Each solution has an energy value that represents how good the solution is. Once the energy $e_{n+1}$ (fitness) is calculated, we can use the energy of the previous solution ($e_n$) to calculate a delta value:

| $\Delta e = e_{n+1} - e_n$ | (4.11) |

Two cases can occur. If the energy $e_{n+1}$ is smaller than the energy of the previous solution $e_n$, that is $\Delta e < 0$, the probability of selecting the new solution is 1. If $\Delta e > 0$, the probability is calculated in the following way:

| $P = e^{-\Delta e / T}$ | (4.12) |

where $T$ represents the temperature. Typically, the value of the temperature is decreased gradually as the number of iterations increases. It can be seen from equation (4.12) that, when the temperature is high, the probability of escaping, that is, of selecting the solution $x_{n+1}$, is high. As the temperature decreases, the probability of escaping becomes smaller.
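The selection rule can be sketched as below. The sketch assumes the usual exponential acceptance probability, exp(-delta_e / T), for solutions that are worse than the previous one; the function names and numeric values are hypothetical.

```python
import math
import random


def acceptance_probability(delta_e, temperature):
    """Probability of selecting the new solution given the energy difference."""
    if delta_e < 0:
        return 1.0                       # better solutions are always selected
    return math.exp(-delta_e / temperature)


def accept(e_new, e_old, temperature, rng=random):
    """Decide whether the new solution replaces the previous one."""
    return rng.random() < acceptance_probability(e_new - e_old, temperature)


# The same worsening step is likely to be accepted when hot and unlikely when cold.
print(acceptance_probability(1.0, temperature=10.0))
print(acceptance_probability(1.0, temperature=0.1))
```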

[Laarhoven and Aarts, 1987] present many applications of the simulated annealing algorithm. They also present a proof that simulated annealing converges globally as the number of iterations tends to infinity.

Genetic programming (GP) is an evolutionary algorithm used to find computer programs based on the same principle of all evolutionary algorithms. The central part of genetic programming is the representation of computer programs as tree structures. These tree structures are crossed and mutated in order to obtain new trees (programs) that perform a given task better.

Figure 4.4 shows the tree representation of a mathematical equation. In a similar way, other more complex operations (like loops and conditions) can be represented.

The simplest scheme of GP creates new solutions based on two operations: crossover and mutation. The crossover operation combines two different solutions, and mutation modifies a single solution. Figure 4.5 shows an example of the crossover operation where two trees are combined into new ones. Figure 4.6 shows the three main cases of the mutation operation: replacement, insertion and deletion. As their names say, these three operations modify the nodes by replacing, inserting and deleting sub-trees.
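The tree representation and the replacement mutation can be sketched with nested tuples. The encoding (an operator followed by two sub-trees, with variable names and constants as leaves) and the helper names are hypothetical choices made for this illustration.

```python
import operator

OPERATORS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def evaluate(tree, env):
    """Evaluate an expression tree; `env` maps variable names to values."""
    if isinstance(tree, tuple):
        op, left, right = tree
        return OPERATORS[op](evaluate(left, env), evaluate(right, env))
    if isinstance(tree, str):
        return env[tree]                 # leaf holding a variable
    return tree                          # leaf holding a constant


def replace_subtree(tree, path, new_subtree):
    """Return a copy of `tree` with the node at `path` replaced.

    `path` is a sequence of 0 (left child) and 1 (right child) steps from the
    root and must address an existing node.
    """
    if not path:
        return new_subtree
    op, left, right = tree
    if path[0] == 0:
        return (op, replace_subtree(left, path[1:], new_subtree), right)
    return (op, left, replace_subtree(right, path[1:], new_subtree))


# Tree for (x * 2) + 3, and a mutant in which the left sub-tree becomes x.
program = ("+", ("*", "x", 2), 3)
mutant = replace_subtree(program, (0,), "x")
print(evaluate(program, {"x": 4}), evaluate(mutant, {"x": 4}))
```

Crossover can be built from the same helper by replacing a sub-tree of one parent with a sub-tree taken from the other.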

In some applications, grammars are defined in order to avoid obtaining nonsense programs. These grammars define a set of rules that the mutation and crossover operations must follow in order to obtain valid trees.

In recent years, genetic programming has gained acceptance because computers have become very powerful. Creating a program automatically and running it is also easier thanks to the advances in compilers.

These are some of the areas in which genetic programming has been successfully applied [Weise, 2009]:

The concepts of genetic programming have been applied in electronics in the automatic design of hardware. One particularization is the Cartesian Genetic Programming [Miller and Thomson, 2000] that is used to evolve Boolean functions. This has been used to design optimized digital circuits.

Even when real-world optimization problems can be expressed as single objective problems, it is difficult to capture all their aspects in a single objective. Techniques for multiobjective optimization are very different from single objective ones and are also more difficult to implement.

In single objective problems it is very easy to determine if a solution is better than another: a solution is better if its fitness is closer to the target. In multiobjective optimization the concept is more difficult to define. In order to find optimal solutions, the commonly adopted concept is the one defined by Vilfredo Pareto [Pareto, 1897]. This concept is known as Pareto optimality. A solution $y$ (a vector of decision variables) is Pareto optimal for $k$ objectives if there does not exist another solution $x$ such that $f_i(x) \le f_i(y)$ for all $i = 1, \dots, k$ and $f_j(x) < f_j(y)$ for at least one $j$. In other words, a solution dominates another if at least one of its characteristics is better and the remaining characteristics are equal (or better).

This concept is illustrated in Figure 4.7 where each point represents a solution. Each solution has two objectives and the target is to minimize them. Each solution defines a dominance region, and any other solution inside that region is dominated.

The first thing to note in multiobjective optimization is that, in most cases, there is no single optimal solution. Instead, there is a complete set of Pareto optimal solutions, known as the Pareto front.

There are some considerations to take into account in order to obtain the Pareto front using evolutionary computation. Since the effectiveness of most evolutionary techniques relies on combining elements of the population, it is very important to maintain diversity in the population.
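As an illustration, the set of non-dominated solutions of a small population can be obtained by discarding every point that is dominated by some other point. This is a brute-force sketch with quadratic cost, assuming minimization of all objectives. The function name `pareto_front` and the sample points are hypothetical.

```python
def pareto_front(points):
    """Return the points that are not dominated by any other point."""
    def dominates(p, q):
        return (all(a <= b for a, b in zip(p, q))
                and any(a < b for a, b in zip(p, q)))

    return [p for p in points if not any(dominates(q, p) for q in points)]

if __name__ == "__main__":
    population = [(1, 5), (2, 3), (3, 4), (4, 1), (5, 5)]
    # (3, 4) and (5, 5) are both dominated by (2, 3).
    print(pareto_front(population))  # [(1, 5), (2, 3), (4, 1)]
```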

Multiobjective optimization techniques can be classified into three groups:

A brief description of each approach will be presented in the next subsections.

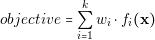

This approach consists of combining all the objectives into a single value. The most typical aggregating function is the linear sum of weights. In this approach, we need to define for each objective a weight that represents its importance. The combination of all objectives is calculated as follows:

$$F(x) = \sum_{i=1}^{k} w_i \, f_i(x) \qquad (4.13)$$

where $w_i$ represents the relative importance of objective $i$.

The linear sum of weights has the disadvantage that the results depend entirely on the weight values. Using inappropriate values can lead to incorrect or non-optimal results. Another disadvantage is that it is difficult to generate the non-convex part of the Pareto front (the solutions that only minimize a single objective). Nevertheless, there are nonlinear aggregating functions that can be used to overcome this problem. The main drawback is that it is not easy to define an effective aggregating function that works for all problems.
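Both disadvantages can be observed in a small numerical sketch. The three candidate solutions below are hypothetical and all of them are non-dominated, but the point (3.0, 3.5) lies in a non-convex region of the front. The function names are chosen for illustration and minimization is assumed.

```python
def weighted_sum(objectives, weights):
    """Linear aggregation: sum of each objective times its weight."""
    return sum(w * f for w, f in zip(weights, objectives))

def best_by_weighted_sum(candidates, weights):
    """Return the candidate with the smallest aggregated value."""
    return min(candidates, key=lambda c: weighted_sum(c, weights))

if __name__ == "__main__":
    candidates = [(1.0, 5.0), (3.0, 3.5), (4.0, 1.0)]

    # The selected solution depends entirely on the weights.
    print(best_by_weighted_sum(candidates, (0.8, 0.2)))  # (1.0, 5.0)
    print(best_by_weighted_sum(candidates, (0.2, 0.8)))  # (4.0, 1.0)

    # Sweeping the weights never selects the point in the non-convex region.
    chosen = {best_by_weighted_sum(candidates, (w / 100, 1 - w / 100))
              for w in range(101)}
    print((3.0, 3.5) in chosen)  # False
```

In this example no choice of weights recovers the intermediate trade-off, even though it is Pareto optimal.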

One of the characteristics of a good evolutionary algorithm is that it has to search for the global optima among a wide space of parameters. In order to achieve that, the algorithm should try to keep individuals with very different characteristics. This concept is called “diversification”.

Population-based methods assume that, in order to maintain diversity, it is possible to create a sub-population that targets each objective individually. In this way the best solutions for each single objective are obtained. These solutions are then combined among themselves with the purpose of obtaining solutions with good characteristics in all the objectives. The main problem of population-based methods is that this assumption may not hold for all problems. Some solutions that present good characteristics across all objectives may be discarded because they do not stand out in any individual objective.

Pareto-based approaches use the concept of Pareto dominance, which allows keeping solutions with a good trade-off among the objectives. There are many algorithms whose main objective is to maintain the diversity of the population. The central part of these algorithms is the way they define when one solution is better than another. Some of the approaches are based on the following definitions:
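The selection step of a population-based method, and its main weakness, can be sketched as follows. This is a simplified illustration under the assumption that each sub-population is formed by taking the best individuals for one objective (minimization). The function name `select_per_objective` and the sample population are hypothetical.

```python
def select_per_objective(population, per_objective):
    """Build one sub-population per objective from its best individuals."""
    selected = []
    n_objectives = len(population[0])
    for i in range(n_objectives):
        ranked = sorted(population, key=lambda s: s[i])
        selected.extend(ranked[:per_objective])
    return selected

if __name__ == "__main__":
    population = [(1, 9), (2, 8), (5, 5), (8, 2), (9, 1)]
    parents = select_per_objective(population, per_objective=2)
    print(parents)            # [(1, 9), (2, 8), (9, 1), (8, 2)]
    print((5, 5) in parents)  # False: the balanced solution is discarded
```

The solution (5, 5) is non-dominated and offers a balanced trade-off, yet it is never selected because it is not among the best for either objective taken alone.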

A solution can be better than another if:

In addition to these comparison criteria, these algorithms implement mechanisms to improve performance and diversification, for example limiting the number of Pareto solutions in a given region of the space.

Two generations of Pareto-based algorithms can be distinguished. The first generation is characterized by the use of niching, fitness sharing and Pareto ranking. The second generation introduces the concept of elitism. Elitism tries to preserve diversity by keeping an external population, formed by individuals with good characteristics, and combining them with the rest of the population over many generations. Some of the most popular algorithms of the second generation are:

A brief explanation of these algorithms is presented in the following sections.

The Strength Pareto Evolutionary Algorithm (SPEA) uses two populations. One contains only the solutions belonging to the Pareto front, and the other contains a general population. The Pareto front is stored in order to preserve the best solutions, whereas the general population is stored in order to preserve diversity.

The basic flow diagram of the SPEA algorithm is shown in Figure 4.8. The first step is to obtain all the non-dominated solutions from the population. These non-dominated solutions are combined with the stored Pareto front. If the size of the new Pareto front exceeds the maximum number of solutions, a clustering algorithm is used to obtain its most significant members. The next step is to calculate the strength of each individual in the Pareto front. The strength is a value between 0 and 1 that reflects how many individuals of the population a given solution dominates. The next step is to calculate the fitness of each individual in the population. This value is calculated by adding the strengths of all the individuals of the Pareto front that cover the given solution. Once the strengths and fitness values are calculated, a new population is obtained from the Pareto front and the current population. The stronger individuals and the ones with better fitness are more likely to be selected.

The SPEA algorithm is especially useful when the optimization variables are continuous or when the number of solutions that belong to the Pareto front is very large. This benefit comes from the fact that SPEA reduces the number of stored solutions by using a clustering algorithm to obtain the most significant individual of each group of very close solutions. More information about SPEA can be found in [Zitzler and Thiele, 1998].
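The strength and fitness calculations can be sketched as follows. This is an illustration under stated assumptions rather than a reference implementation: all objectives are minimized, a solution "covers" another if it is no worse in every objective, the strength is normalized as n / (N + 1) for a population of N individuals, and 1 is added to the fitness of population members so that they always rank behind the stored Pareto front. The normalization and the offset should be checked against [Zitzler and Thiele, 1998]. The function names are hypothetical.

```python
def covers(p, q):
    """p covers q if p is no worse than q in every objective (minimization)."""
    return all(a <= b for a, b in zip(p, q))

def spea_strengths(front, population):
    """Strength of each stored front member: covered fraction of the population."""
    n = len(population)
    return [sum(covers(a, p) for p in population) / (n + 1) for a in front]

def spea_fitness(front, population):
    """Fitness of each population member: 1 + strengths of the members covering it."""
    strengths = spea_strengths(front, population)
    return [1 + sum(s for a, s in zip(front, strengths) if covers(a, p))
            for p in population]

if __name__ == "__main__":
    front = [(1, 4), (3, 1)]
    population = [(2, 5), (4, 2), (4, 5)]
    print(spea_strengths(front, population))  # [0.5, 0.5]
    print(spea_fitness(front, population))    # [1.5, 1.5, 2.0]
```

In this sketch lower fitness values are better: the individual (4, 5) is covered by both members of the front and therefore receives the worst value.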

The Micro-Genetic Algorithm (Micro-GA) is an approach developed to improve the performance of genetic algorithms. It is based on the idea that a very large population is not necessary in order to obtain good results. Micro-GA divides the population into two parts: a replaceable part and a non-replaceable part. From these two parts a very small set of individuals is selected and used as a micro-population. A genetic algorithm is executed on this micro-population for a small number of generations in order to improve the solutions. Using this improved micro-population, the replaceable population is updated and the process is repeated. In this algorithm, the non-replaceable part is used to preserve diversity. Each step of the Micro-GA search acts as a kind of local search.

Because the micro-population is very small (usually three individuals), the Micro-GA has to perform fewer operations, which makes it faster.

The Pareto Archived Evolution Strategy (PAES) algorithm defines a way in which solution candidates can be selected in order to obtain the Pareto front. A simplified flow diagram is shown in Figure 4.10. The first step is to generate a new solution candidate by mutating (performing a slight modification to) an existing solution. If the existing solution dominates the new one, the new one is discarded. If the new one dominates the existing one, and none of the other members of the population dominates the new one, the new solution is included in the population. If the new solution neither dominates nor is dominated by any other solution, a diversity test is performed in order to decide which solution should be kept.

Other authors have presented modifications to the basic PAES algorithm, for example [Oltean et al., ], in which an adaptive representation of the coding is used in order to improve the convergence of the algorithm.
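The acceptance rules described above can be condensed into a small decision function. This is a sketch under assumptions: objectives are minimized, a candidate dominated by any stored member is discarded, and the diversity test itself is left out, since the text does not detail it. The names `paes_decision` and `archive` are hypothetical.

```python
def dominates(p, q):
    """True if p Pareto-dominates q (minimization)."""
    return (all(a <= b for a, b in zip(p, q))
            and any(a < b for a, b in zip(p, q)))

def paes_decision(current, candidate, archive):
    """Classify a mutated candidate as 'discard', 'accept' or 'diversity_test'."""
    if dominates(current, candidate):
        return "discard"
    # Assumption: a candidate dominated by a stored member is also discarded.
    if any(dominates(member, candidate) for member in archive):
        return "discard"
    if dominates(candidate, current):
        return "accept"
    # Neither solution dominates the other: a diversity test must decide.
    return "diversity_test"

if __name__ == "__main__":
    print(paes_decision((2, 2), (3, 3), [(2, 2)]))          # discard
    print(paes_decision((2, 2), (1, 1), [(2, 2)]))          # accept
    print(paes_decision((2, 2), (1, 3), [(2, 2)]))          # diversity_test
    print(paes_decision((2, 2), (1, 3), [(2, 2), (1, 2)]))  # discard
```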

This chapter has presented an introduction to the metaheuristic techniques used in this thesis. We have considered four popular algorithms: Ant Colony System, Harmony Search, Simulated Annealing and Genetic Programming. These algorithms have been widely used to solve problems in many areas of engineering.

In addition, the main methods to solve multi-objective problems have been presented. These methods allow us to obtain, in a single run, solutions that optimize all the defined targets. Using these techniques we obtain the solutions belonging to the Pareto front. These solutions have the characteristic that none of them can be considered better than the others. Having this Pareto front is especially useful when we want to perform a trade-off analysis in order to obtain the most appropriate solutions for the target application.

In the following chapters the applications of these methods to the design of power systems will be shown.

Don’t worry about people stealing an idea. If it’s original, you will have to ram it down their throats.

Howard H. Aiken

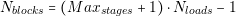

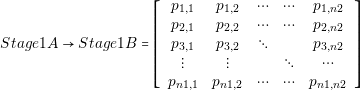

In this second part of the thesis, the main contributions to the power electronics field are presented. With the aim of solving the problem stated at the beginning of this thesis, we propose dividing the initial design task into two parts: architecture generation and component selection. In order to perform these two tasks effectively, we propose the design flow shown in Figure 4.11. As the figure shows, this design flow is composed of five modules:

The purpose of the Converter Capture and Converter Database is to help the designer to create a library of models from different converters. The Architecture Generator and Converter Selector will use these models to create a set of optimal power systems. Finally, the Results Post Processing module will help the designer to sort, classify or visualize the results.

This design flow provides a lot of flexibility to the designer. With these modules, we can cover all typical design cases. For example:

In the following two chapters we present a detailed explanation of these methods. The first chapter presents the techniques proposed to automatically generate power architectures, and the second focuses on converter selection.

That which is static and repetitive is boring. That which is dynamic and random is confusing. In between lies art.

John Locke

This chapter focuses on the methods proposed to automatically generate power supply architectures. We present three different algorithms. The first is the combinatorial (or brute-force) approach, which performs an exhaustive search of all possible combinations in which the converters can be connected. The second and third use custom-designed metaheuristic algorithms. The Matrix-Structure algorithm is presented in the second section. It uses a grid-style (or matrix) representation of an architecture and a metaheuristic algorithm that creates new architectures by changing the elements in the grid. The third section presents the Tree-Shaking algorithm. This new algorithm creates architectures using branches of connected converters and, in addition, makes it possible to optimize multiple objectives.

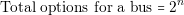

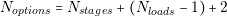

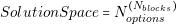

This approach is called combinatorial because it tries to find all possible combinations that can be built with a set of converters. As mentioned in the introduction, the number of architectures that can be built from a large number of converters can be extremely large. In theory, the combinatorial search will find all these solutions. However, in many problems it is not possible to calculate all solutions, even using a very powerful computer.

The objective of implementing a combinatorial search first is to evaluate the magnitude of the problem. With this information, it is possible to get an idea of the limits of the combinatorial search.

Combinatorial search is based on a very basic principle: a complex problem can be solved by first solving all the smaller problems that it comprises. In computer science this concept is called “recursion” (cf. [Graham et al., 1994] for a detailed explanation). In this specific case, a large power architecture can be built by joining small power architectures.

Consider the example of a set of dominoes (see Figure 5.1). Each tile has two sides, each with a number from 0 to 6. These values are analogous to the input and output voltages of a converter. If we want to know all the possible ways in which, by joining tiles, we can start a sequence at 6 and end it at 3, we have to calculate all possible paths. Figure 5.2 shows all the possible combinations for this example without using doubles (tiles with the same number on both sides).

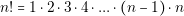

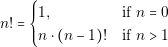

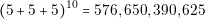

We can use a very simple algorithm to solve this problem. Its basis is similar to the recursive algorithm used to calculate the factorial of a number. The factorial of a number $n$ is defined as follows:

$$n! = \prod_{k=1}^{n} k \qquad (5.1)$$

This means that for a number n we have to perform the following calculations:

$$n! = 1 \cdot 2 \cdot 3 \cdots (n-1) \cdot n \qquad (5.2)$$

The factorial of a number, as mentioned before, has a recursive representation. The characteristic of this representation is that it calculates the factorial of a number $n$ by first calculating the factorial of $n - 1$. For example, calculating 4! requires first calculating 3!, calculating 3! requires calculating 2!, and so on. Hence, the problem is split into smaller problems, which makes the solution simple. The recursive representation is the following:

$$n! = \begin{cases} 1 & \text{if } n = 0 \\ n \cdot (n-1)! & \text{if } n > 0 \end{cases} \qquad (5.3)$$

It can be seen that the first case, when $n = 0$, returns the value 1. This condition is needed in order to calculate the factorial of 1. We can consider the first case as the algorithm “stop rule”. Without this rule, the algorithm would continue calculating over negative numbers, producing an erroneous result. In the second case, the factorial function calls itself. That is what makes it recursive.

The calculation of sequences of tiles can be represented in a recursive way. In this section the calculation of a sequence of tiles, starting with $n$ and ending in $m$, will be represented as $n \to m$, and tiles will be represented as (k,l).
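The recursive definition translates directly into code. The following sketch shows the stop rule and the recursive call. The explicit check for negative arguments is an addition made here so that the function fails clearly instead of recursing without end.

```python
def factorial(n):
    """Recursive factorial of a non-negative integer n."""
    if n < 0:
        raise ValueError("factorial is not defined for negative numbers")
    if n == 0:
        return 1                   # stop rule
    return n * factorial(n - 1)    # the function calls itself

if __name__ == "__main__":
    print(factorial(4))  # 24, computed as 4 * 3 * 2 * 1 * 1
```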

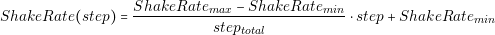

The steps to solve the previous example ($6 \to 3$, see Figure 5.2) are represented in Figure 5.3. The first step is to take all tiles that include the number 3, in this case (6,3), (5,3) and (4,3)¹. These three tiles are shown in Figure 5.3.a, where it can be seen that the tile (6,3) is a solution. Now, in order to complete the sequences, the same problem must be solved for $6 \to 4$ and $6 \to 5$. Figure 5.3.b shows that $6 \to 4$ has one solution, (6,4), and also that it requires solving $6 \to 5$. Figure 5.3.c shows the only solution for $6 \to 5$. All the combinations shown in Figure 5.2 can be obtained by joining these sequences of tiles. The specific way of joining them is shown in Figure 5.4.
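The procedure above can be sketched as a short recursive function. This is an illustrative sketch, not the implementation used in this thesis. It assumes, as in the example, that only tiles whose first number is larger than the second are used (so doubles are excluded and the sequences are strictly decreasing). The function name `sequences` is hypothetical.

```python
def sequences(start, end):
    """Return every sequence of tiles that starts at `start` and ends at `end`.

    Assumption: each tile (k, l) satisfies k > l, as in the example of the text.
    """
    if start == end:
        return [[]]  # stop rule: nothing left to join
    result = []
    # Take every tile (k, end) and solve the smaller problem start -> k.
    for k in range(end + 1, start + 1):
        for prefix in sequences(start, k):
            result.append(prefix + [(k, end)])
    return result

if __name__ == "__main__":
    for seq in sequences(6, 3):
        print(seq)
    # [(6, 5), (5, 4), (4, 3)]
    # [(6, 4), (4, 3)]
    # [(6, 5), (5, 3)]
    # [(6, 3)]
```

Under this assumption the number of sequences doubles each time the distance between the start and end values grows by one (4 sequences for 6 to 3, 32 for 6 to 0), which gives a first impression of how quickly the search grows.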